The Evolution of Graphics Cards: From Simple Chips to AI-Powered Machines

Graphics cards, and the GPUs (graphics processing units) at their heart, have come a long way since they were first introduced. They started as simple tools to display images on screens and have now become powerful processors that help with gaming, artificial intelligence (AI), and more. In this article, we will explore the history of graphics cards, their current role, and what the future holds for this important technology.

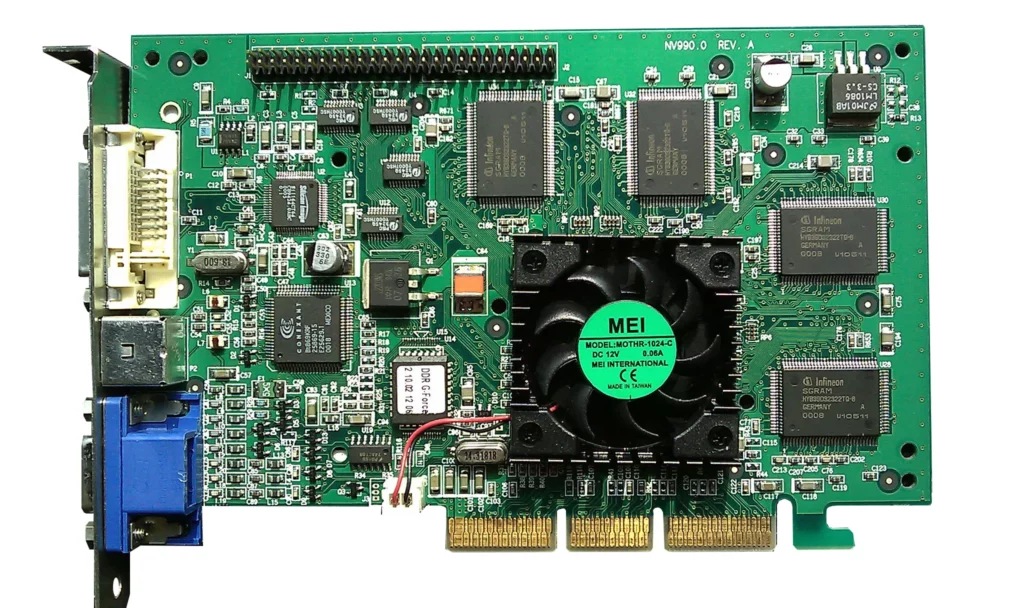

The Early Days: Simple Beginnings

In the 1980s and early 1990s, the first graphics cards for personal computers appeared. Their main job was to help the computer’s CPU (Central Processing Unit) by taking over the work of handling visuals. These early cards were simple and mainly worked on 2D graphics, such as showing windows and basic shapes on the screen. Examples from this period include IBM’s Professional Graphics Controller (1984) and the VGA standard (1987). They made the visual experience on personal computers smoother and clearer.

The 3D Revolution: The Rise of Gaming

The mid-1990s changed everything for graphics cards, especially with the rise of 3D games. Gamers wanted more realistic graphics, and this led to the creation of specialized 3D accelerator cards. In 1999, NVIDIA, a company founded in 1993, released the GeForce 256, which it marketed as the world’s first GPU. The chip handled transform and lighting (T&L) in hardware, that is, positioning objects in 3D space and calculating how light falls on them, which was work the CPU had done before. This made games look more lifelike.
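To make the "lighting and transforming" work described above more concrete, here is a minimal sketch in Python of the two steps applied to a single point. It is an illustration only, not how any particular graphics chip is implemented: the function names (`rotate_y`, `diffuse_intensity`) and the sample vertex, normal, and light direction are all assumptions made up for this example. Real hardware performs the same kind of math on millions of vertices per second.

```python
import math

def rotate_y(point, angle_rad):
    """Transform step: rotate a 3D point (or direction) around the Y axis."""
    x, y, z = point
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x + s * z, y, -s * x + c * z)

def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def diffuse_intensity(normal, light_dir):
    """Lighting step: simple diffuse (Lambertian) brightness in [0, 1].

    A surface facing the light gets 1.0; a surface edge-on or facing
    away from the light gets 0.0.
    """
    n = normalize(normal)
    l = normalize(light_dir)
    return max(0.0, sum(a * b for a, b in zip(n, l)))

# Hypothetical sample data: one vertex, its surface normal, and a light.
vertex = (1.0, 0.0, 0.0)
normal = (0.0, 0.0, 1.0)      # surface initially faces the light
light_dir = (0.0, 0.0, 1.0)   # direction from the surface toward the light

# Turn the object a quarter turn, then light it.
rotated_vertex = rotate_y(vertex, math.pi / 2)
rotated_normal = rotate_y(normal, math.pi / 2)
brightness = diffuse_intensity(rotated_normal, light_dir)

print("vertex after rotation:", rotated_vertex)
print("brightness after rotation:", brightness)
```

Before the rotation the surface faces the light directly and would be fully lit. After the quarter turn it sits edge-on to the light, so the computed brightness drops to essentially zero.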

This era also saw tough competition between NVIDIA and ATI (acquired by AMD in 2006), with both companies pushing to improve 3D graphics for hugely popular games like Quake, Unreal, and Half-Life.

Present Day: GPUs Are More Than Just for Gaming

Today’s graphics cards do much more than just power video games. They are now used in many fields, such as artificial intelligence (AI), cryptocurrency mining, and high-performance computing. One of the biggest advancements is real-time ray tracing, a technique that simulates how rays of light travel and bounce off objects to produce realistic reflections, shadows, and lighting. This was once practical only for pre-rendered Hollywood movie effects but can now be seen in home gaming systems.
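The core question a ray tracer asks, over and over, is "does this ray of light hit this object, and where?" The sketch below shows that test for the simplest possible object, a sphere. It is a minimal illustration under stated assumptions, not a description of how any GPU or game engine implements ray tracing: the function name `ray_sphere_hit` and the sample scene are invented for this example, and the ray direction is assumed to be a unit-length vector.

```python
import math

def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance along the ray to the nearest hit, or None on a miss.

    Assumes `direction` is unit length, so the quadratic's leading
    coefficient is 1.
    """
    # Vector from the sphere's center to the ray's starting point.
    oc = tuple(o - c for o, c in zip(origin, center))
    # Solve t^2 + b*t + c = 0 for the distance t along the ray.
    b = 2.0 * sum(d * e for d, e in zip(direction, oc))
    c = sum(e * e for e in oc) - radius * radius
    discriminant = b * b - 4.0 * c
    if discriminant < 0:
        return None  # the ray passes by the sphere
    root = math.sqrt(discriminant)
    # Try the nearer intersection first; ignore hits behind the ray's origin.
    for t in ((-b - root) / 2.0, (-b + root) / 2.0):
        if t > 0:
            return t
    return None

# Hypothetical scene: a camera at the origin and a sphere 5 units in front of it.
camera = (0.0, 0.0, 0.0)
sphere_center = (0.0, 0.0, -5.0)
sphere_radius = 1.0

hit = ray_sphere_hit(camera, (0.0, 0.0, -1.0), sphere_center, sphere_radius)
miss = ray_sphere_hit(camera, (0.0, 1.0, 0.0), sphere_center, sphere_radius)
print("ray aimed at the sphere:", hit)
print("ray aimed upward:", miss)
```

A full renderer repeats this kind of test for every pixel and every bounce of light, against far more complex shapes, which is why dedicated hardware is needed to do it in real time.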

Modern GPUs also support AI tasks, with special cores, such as NVIDIA’s Tensor Cores, designed to speed up the matrix math behind deep learning, which is a key part of many AI projects. This makes GPUs essential not only for gaming but also for researchers and tech companies working with AI.
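Deep learning workloads are dominated by one operation: multiplying matrices and adding the results together. The sketch below shows that operation in plain Python for a single tiny neural network layer. It is only meant to show the arithmetic, and the names (`matmul_add`, `relu`) and the sample numbers are assumptions made up for this example. A GPU runs the same pattern on very large matrices, with many multiply-and-add steps happening in parallel.

```python
def matmul_add(a, b, c):
    """Compute D = A x B + C, with each matrix given as a list of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [
            sum(a[i][k] * b[k][j] for k in range(inner)) + c[i][j]
            for j in range(cols)
        ]
        for i in range(rows)
    ]

def relu(matrix):
    """Common activation function: replace negative values with zero."""
    return [[max(0.0, x) for x in row] for row in matrix]

# Hypothetical layer: 2 inputs, 2 outputs.
inputs = [[1.0, 2.0]]                    # one sample with two features
weights = [[0.5, -1.0], [0.25, 0.5]]     # 2x2 weight matrix
bias = [[0.0, -1.0]]                     # one bias value per output

layer_output = relu(matmul_add(inputs, weights, bias))
print(layer_output)  # [[1.0, 0.0]]
```

Each output value is independent of the others, which is what makes this work such a good fit for a processor with thousands of small cores.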

The Future of Graphics Cards

The future of graphics cards looks exciting. Several trends will shape the next generation of GPUs:

- Better AI Integration: Future GPUs will likely rely more heavily on AI techniques, such as AI-based image upscaling, to make real-time adjustments to graphics and create more realistic experiences.

- Quantum Computing: Although still experimental, quantum computing could eventually work alongside GPUs to tackle certain problems that today’s technology cannot handle. How the two will fit together is still an open question.

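- Cloud Gaming: Cloud gaming services, like NVIDIA GeForce NOW, use powerful GPUs in remote data centers to stream games to almost any device. Google Stadia, an early example, shut down in January 2023, which shows the market is still settling. With a fast internet connection, gamers can play demanding games without buying expensive hardware.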

- Energy Efficiency: Future GPUs will focus on using less energy, which is better for the environment, while still delivering top performance.

Conclusion: The Ever-Changing World of GPUs

Graphics cards have evolved from simple tools to display images into powerful machines that are essential for gaming, AI, and much more. As technology continues to grow, GPUs will lead the way in innovation, making the future of graphics cards as exciting as their past.